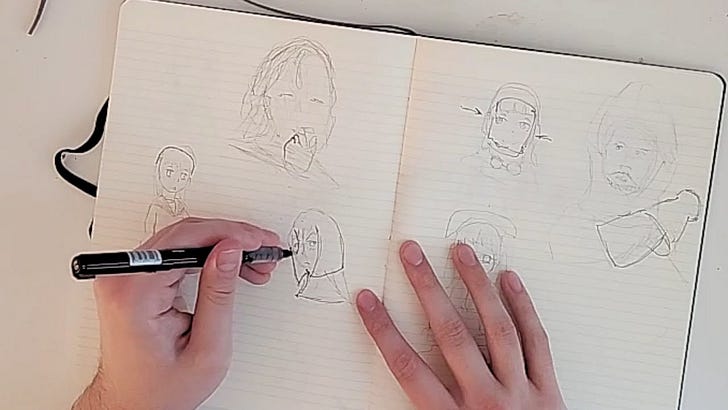

I drew physically for 30 days. Here's what I learned

Plus, more links to make you a little bit smarter today.

I drew physically for 30 days. Here's what I learned

I used Obsidian for 90 days. Here’s what happened.

For years, I was begging note-taking apps to start using a wiki-like hypertext system. At that point I wanted it more to plan out my fiction writing, but as time grew I realized a personal wiki could be used for just about anything.

Unveiling the factors of aesthetic preferences with explainable AI

The allure of aesthetic appeal in images captivates our senses, yet the underlying intricacies of aesthetic preferences remain elusive. In this study, we pioneer a novel perspective by utilizing several different machine learning (ML) models that focus on aesthetic attributes known to influence preferences. Our models process these attributes as inputs to predict the aesthetic scores of images. Moreover, to delve deeper and obtain interpretable explanations regarding the factors driving aesthetic preferences, we utilize the popular Explainable AI (XAI) technique known as SHapley Additive exPlanations (SHAP). Our methodology compares the performance of various ML models, including Random Forest, XGBoost, Support Vector Regression, and Multilayer Perceptron, in accurately predicting aesthetic scores, and consistently observing results in conjunction with SHAP. We conduct experiments on three image aesthetic benchmarks, namely Aesthetics with Attributes Database (AADB), Explainable Visual Aesthetics (EVA), and Personalized image Aesthetics database with Rich Attributes (PARA), providing insights into the roles of attributes and their interactions. Finally, our study presents ML models for aesthetics research, alongside the introduction of XAI. Our aim is to shed light on the complex nature of aesthetic preferences in images through ML and to provide a deeper understanding of the attributes that influence aesthetic judgements.

Publicly-Detectable Watermarking for Language Models

We present a publicly-detectable watermarking scheme for LMs: the detection algorithm contains no secret information, and it is executable by anyone. We embed a publicly-verifiable cryptographic signature into LM output using rejection sampling and prove that this produces unforgeable and distortion-free (i.e., undetectable without access to the public key) text output. We make use of error-correction to overcome periods of low entropy, a barrier for all prior watermarking schemes. We implement our scheme and find that our formal claims are met in practice.

TimeGPT-1

In this paper, we introduce TimeGPT, the first foundation model for time series, capable of generating accurate predictions for diverse datasets not seen during training. We evaluate our pre-trained model against established statistical, machine learning, and deep learning methods, demonstrating that TimeGPT zero-shot inference excels in performance, efficiency, and simplicity. Our study provides compelling evidence that insights from other domains of artificial intelligence can be effectively applied to time series analysis. We conclude that large-scale time series models offer an exciting opportunity to democratize access to precise predictions and reduce uncertainty by leveraging the capabilities of contemporary advancements in deep learning.

PROMPTWIZARD: TASK-AWARE PROMPT OPTIMIZATION FRAMEWORK

Large language models (LLMs) have transformed AI across diverse domains, with prompting being central to their success in guiding model outputs. However, manual prompt engineering is both labor-intensive and domain-specific, necessitating the need for automated solutions. We introduce PromptWizard, a novel, fully automated framework for discrete prompt optimization, utilizing a self-evolving, self-adapting mechanism. Through a feedback-driven critique and synthesis process, PromptWizard achieves an effective balance between exploration and exploitation, iteratively refining both prompt instructions and in-context examples to generate human-readable, task-specific prompts. This guided approach systematically improves prompt quality, resulting in superior performance across 45 tasks. PromptWizard excels even with limited training data, smaller LLMs, and various LLM architectures. Additionally, our cost analysis reveals a substantial reduction in API calls, token usage, and overall cost, demonstrating PromptWizard’s efficiency, scalability, and advantages over existing prompt optimization strategies.

A Human-Like Reasoning Framework for Multi-Phases Planning Task with Large Language Models

Recent studies have highlighted their proficiency in some simple tasks like writing and coding through various reasoning strategies. However, LLM agents still struggle with tasks that require comprehensive planning, a process that challenges current models and remains a critical research issue. In this study, we concentrate on travel planning, a Multi-Phases planning problem, that involves multiple interconnected stages, such as outlining, information gathering, and planning, often characterized by the need to manage various constraints and uncertainties. Existing reasoning approaches have struggled to effectively address this complex task [54]. Our research aims to address this challenge by developing a human-like planning framework for LLM agents, i.e., guiding the LLM agent to simulate various steps that humans take when solving Multi-Phases problems. Specifically, we implement several strategies to enable LLM agents to generate a coherent outline for each travel query, mirroring human planning patterns. Additionally, we integrate Strategy Block and Knowledge Block into our framework: Strategy Block facilitates information collection, while Knowledge Block provides essential information for detailed planning. Through our extensive experiments, we demonstrate that our framework significantly improves the planning capabilities of LLM agents, enabling them to tackle the travel planning task with improved efficiency and effectiveness. Our experimental results showcase the exceptional performance of the proposed framework; when combined with GPT-4-Turbo, it attains 10× the performance gains in comparison to the baseline framework deployed on GPT-4-Turbo.